I’m Dmitry Volodin, a second-year M.Sc. student in Computer Science at the Southern Federal University in Rostov-on-Don, Russia. This year I was selected as a Google Summer of Code 2021 student for the Orcasound organization and I’m working on the project “Github Actions Workflows for Scheduled Algorithm Deployment“.

The purpose of this project is to create a suite of GitHub Actions workflows for scheduled algorithm deployment on data streams from Orcasound hydrophones, Ocean Observatories Initiative and possibly some other sources. Over the years the Orcasound organization has produced several algorithms that can be applied to the hydrophone data, including simple processing and spectrogram creation but also more advanced ones like neural network models for orca calls detection. But to apply these algorithms — whether they are for bioacoustic visualizations, noise assessment, or signal detection — we need easy data access and automation. That is where this project comes in!

We want to provide a simple modular interface between data streams, algorithms, and outputs in GitHub Actions. Each step will be described below, but first let me explain what GitHub Actions are.

Essentially, Github Actions are cloud-based on-demand virtual machines with tight GitHub integration. Continuous integration/deployment of software products, issues/pull requests sorting, scheduled tasks and many more automated actions are possible using GitHub Actions workflows. And the ecosystem is only growing!

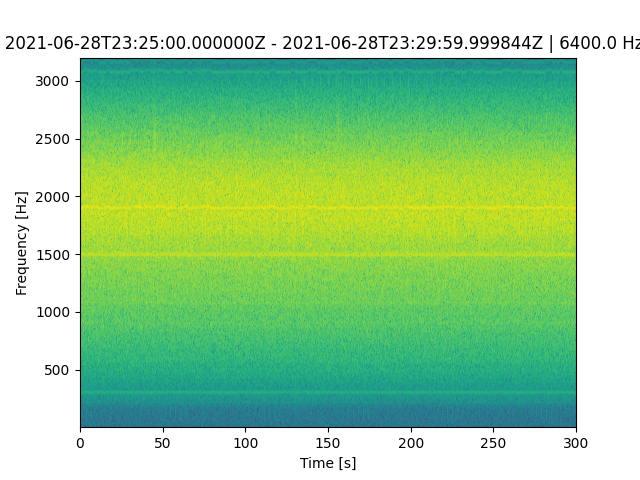

So, how would one use GitHub Actions for scheduled bioacoustic algorithm deployment? One possible workflow (that is currently implemented in the example repo) is to download all hydrophone data for, say, one whole day and then run a given algorithm on this data, saving desired outputs. This approach was used with Ocean Observatories Initiative data streams from their seafloor hydrophones. This workflow runs on a schedule every day, gets data for the previous day, then generates spectrograms for the whole day, and finally uploads these spectrograms after the run so they can be inspected.

A similar workflow was implemented for Orcasound data, but while in the OOI workflow we had to parse HTML pages and download files (currently moving this to use ooipy library) with Orcasound we have to download data from AWS buckets! Orcasound data is split into directories containing approximately 6 hours of data, these directories are named with Unix timestamps instead of human-readable dates and to make things worse, files inside directories don’t have timestamps at all (but we have playlist file with exact file lengths). The goal of this project is to hide these finer details from the outside person and provide a simple, unified interface between data providers (where possible).

Right now most of the configuration in the workflows is hardcoded, but the idea is to create a “module” (GitHub Action) for data acquisition that will accept as input parameters the desired data provider (OOI/Orcasound/etc), source hydrophone (both OOI and Orcasound have multiple hydrophones in service), start/end time of requested data, and desired output type (can be original data format, that would be .mseed for OOI and .ts/flac for Orcasound; or it can be converted to a different audio format, like .wav audio, or even visualized as spectrograms).

Then each algorithm will work with the data and save desired outputs as workflow run artifacts (right now we do this with spectrograms), save processed data to the repository if desired (you can open pull requests through GitHub Actions or even commit directly if you feel brave), or send notifications (POST requests/etc).

Next on my list is the extraction of the data acquisition step into a separate Action and working on more algorithms (we have a huge backlog already!). I’m excited for what’s to come!